Datasets

Showdown Replay Logs

Showdown makes battle replays accessible via a public API. The competition organizers maintain curated datasets for convenience and (a little) extra privacy, and host them on Hugging Face so that this competition does not burden Showdown with download requests.

We'd encourage you to use them unless you have a good reason not to.

Collectively, they cover the entire range of supported rulesets for the competition:

|

Formats |

Time Period |

Battles |

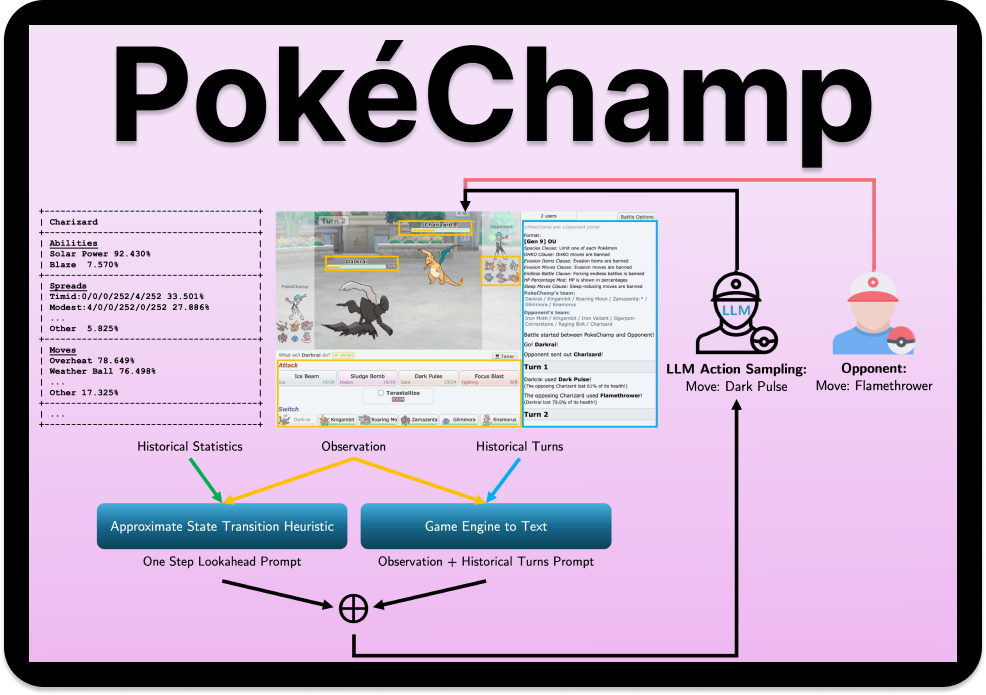

pokechamp |

Many (39+ (Gen 1-9 OU, Gen 9 VGC, and more)) |

2024-2025 |

2M |

metamon-raw-replays |

All PokéAgent Except VGC |

2014-2025 |

1.8M |

Replays as Agent Training Data

Showdown replays are saved from the point of view of a spectator rather than the point of view of a player, which can make it difficult to use them directly in an imperfect information game like Pokémon. We need to reconstruct (and often predict) the perspective of each player to create a more typical offline RL or imitation learning dataset.
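To make the spectator-view problem concrete, here is a minimal sketch of how much of each team a replay log actually reveals. It assumes the pipe-delimited Showdown protocol lines (`|switch|...` and `|move|...`) and uses a made-up sample log; the function name `revealed_teams` is hypothetical and is not part of metamon or pokechamp.

```python
def revealed_teams(log: str) -> dict:
    """Collect the Pokémon and moves a spectator log reveals for each side."""
    teams = {"p1": {}, "p2": {}}  # side -> species -> set of moves seen
    active = {}  # slot such as "p1a" -> species currently in that slot
    for line in log.splitlines():
        parts = line.split("|")
        if len(parts) < 4:
            continue  # not enough fields to be a switch or move message
        kind = parts[1]
        if kind in ("switch", "drag"):
            # |switch|p1a: Nickname|Species, L50, M|100/100
            slot = parts[2].split(":")[0]
            species = parts[3].split(",")[0]
            active[slot] = species
            teams[slot[:2]].setdefault(species, set())
        elif kind == "move":
            # |move|p1a: Nickname|Move Name|p2a: Target
            slot = parts[2].split(":")[0]
            species = active.get(slot)
            if species is not None:
                teams[slot[:2]][species].add(parts[3])
    return {
        side: {mon: sorted(moves) for mon, moves in mons.items()}
        for side, mons in teams.items()
    }


# Made-up log for illustration only.
SAMPLE_LOG = """|player|p1|Alice|1|
|player|p2|Bob|2|
|switch|p1a: Pikachu|Pikachu, L50, M|100/100
|switch|p2a: Gyarados|Gyarados, L50, F|100/100
|turn|1
|move|p1a: Pikachu|Thunderbolt|p2a: Gyarados
|faint|p2a: Gyarados
|switch|p2a: Garchomp|Garchomp, L50, M|100/100
|turn|2
|move|p2a: Garchomp|Earthquake|p1a: Pikachu
"""

print(revealed_teams(SAMPLE_LOG))
```

In this sample, the log never shows Gyarados's moves, Pikachu's other three moves, or any benched team members. A player-perspective dataset has to fill in or predict exactly that missing information.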

metamon converts its replay dataset into a flexible format that allows customization of observations, actions, and rewards. More than 3.5M full battle trajectories are stored on Hugging Face at metamon-parsed-replays and can be accessed through the metamon repo.

pokechamp has a large, diverse set of replays from almost every game mode, available at pokechamp-replays. Utilities in pokechamp can recreate its LLM-Agent prompts and decisions from replays in a similar fashion.

Miscellaneous

teams: All of the Showdown rulesets in the competition require players to pick their own teams. This dataset provides sets of teams gathered from forums, predicted from replays, and/or procedurally generated from Showdown trends. This creates a starting point for anyone less familiar with Competitive Pokémon, and establishes diverse team sets for self-play.

usage-stats: A convenient way to access the Showdown team usage stats for the formats covered by the competition and the timeframe covered by the replay datasets. This dataset also includes a log of all the partially revealed teams in replays. Team prediction is an interesting subproblem that your method may want to address!
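As one illustration of the team prediction subproblem, the sketch below fills a partially revealed moveset with the most frequently used moves that have not yet been seen. This is a naive frequency baseline, not the method used by metamon or pokechamp. The function `predict_moveset` is hypothetical, and the usage numbers are invented placeholders rather than values from the usage-stats dataset.

```python
def predict_moveset(revealed, move_usage, slots=4):
    """Fill unrevealed move slots with the most-used moves not yet seen.

    revealed:   moves already observed in the replay
    move_usage: mapping of move name -> usage frequency for this Pokémon
    slots:      total number of move slots to fill
    """
    revealed = list(revealed)
    # Rank unseen moves from most to least used.
    candidates = sorted(
        (move for move in move_usage if move not in revealed),
        key=lambda move: move_usage[move],
        reverse=True,
    )
    missing = max(0, slots - len(revealed))
    return revealed + candidates[:missing]


# Invented numbers for illustration only.
TOY_USAGE = {
    "Earthquake": 0.95,
    "Swords Dance": 0.60,
    "Scale Shot": 0.55,
    "Stealth Rock": 0.45,
    "Fire Fang": 0.20,
}

print(predict_moveset(["Stealth Rock"], TOY_USAGE))
```

A stronger predictor would condition on the moves already revealed (movesets are correlated) and on the rest of the team, which a per-move frequency table like this ignores.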

Baselines

Organizers will inflate the player pool on the PokéAgent ladder with a rotating cast of existing baselines covering a wide range of skill levels and team choices.

At launch, these will include:

- Simple heuristics to simulate the low-Elo ladder and let new methods get started. These are mainly sourced from metamon's basic evaluation opponents.

- The best metamon and pokechamp agents playing with competitive team choices. These baselines have already demonstrated performance comparable to strong human players. If all goes well, they will be at the bottom of the leaderboard by the end of the competition!

- Mixed metamon and pokechamp agents aimed at increasing variety and forcing participants to battle teams that resemble the real Showdown ladder. Examples include varied LLM backends with several prompting and search strategies, and hundreds of checkpoints from metamon policies at various stages of training, sampling from thousands of unique teams.

Launch baselines are already open-source, so you are free to skip the ladder queue by hosting them on your local server with help from their home repo.

Any additional (stronger) agents in development by the organizers will be added to the ladder rotation as the competition progresses.

We hope the ladder will also be full of participants trying new ideas; your agents' competition will always be improving!

Support

Competition staff will be active on the community Discord and are committed to answering questions (and fixing any issues that may arise) related to the starter datasets, baselines, competition logistics, and Showdown/Pokémon more broadly.

However, due to limited bandwidth, we caution that the technical details involved in improving upon provided methods may be deemed out-of-scope (e.g., RL training details beyond the provided documentation). This is mainly because, given the datasets and baselines, you would have many other viable options that are maintained by larger teams and better suited to a broad audience. Still, please feel free to reach out, and we will help in any way we can.