Pokémon Battling as an AI Problem

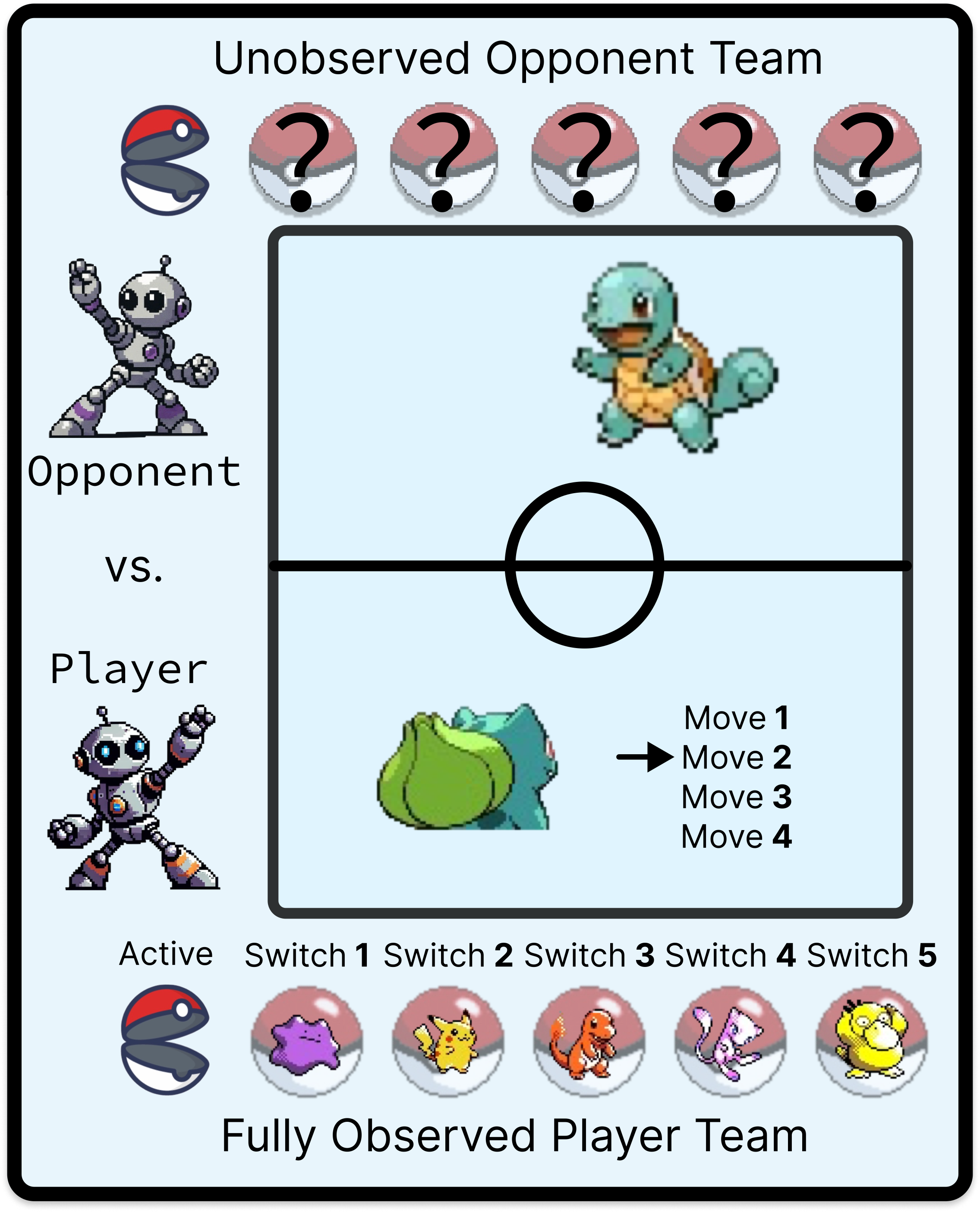

Competitive Pokémon turns the turn-based combat of the Pokémon franchise into a standalone two-player strategy game. Players design teams of Pokémon and battle an opponent. On each turn, a player can either use one of the moves of the Pokémon currently on the field or switch to another member of their team. Moves can damage the opponent's Pokémon and eventually cause them to faint; the last player with Pokémon still standing wins.

As an AI benchmark, Pokémon is most defined by:

- Team Design: Teams are created by choosing six species from the hundred(s) that are available. Each Pokémon then needs four moves, an item, an ability, and custom statistics. Players design teams to counter common trends, then design new teams to counter those counters, and so on. Selecting a team and then battling with it is a challenging two-stage optimization problem, and the metagame is always evolving.

- Generalization: Diverse team combinations create an incredibly wide range of initial states, and each matchup poses its own strategic puzzle. Agents have to learn to adapt their strategy by weighing the strengths and weaknesses of their own team against the threats and opportunities presented by their opponent.

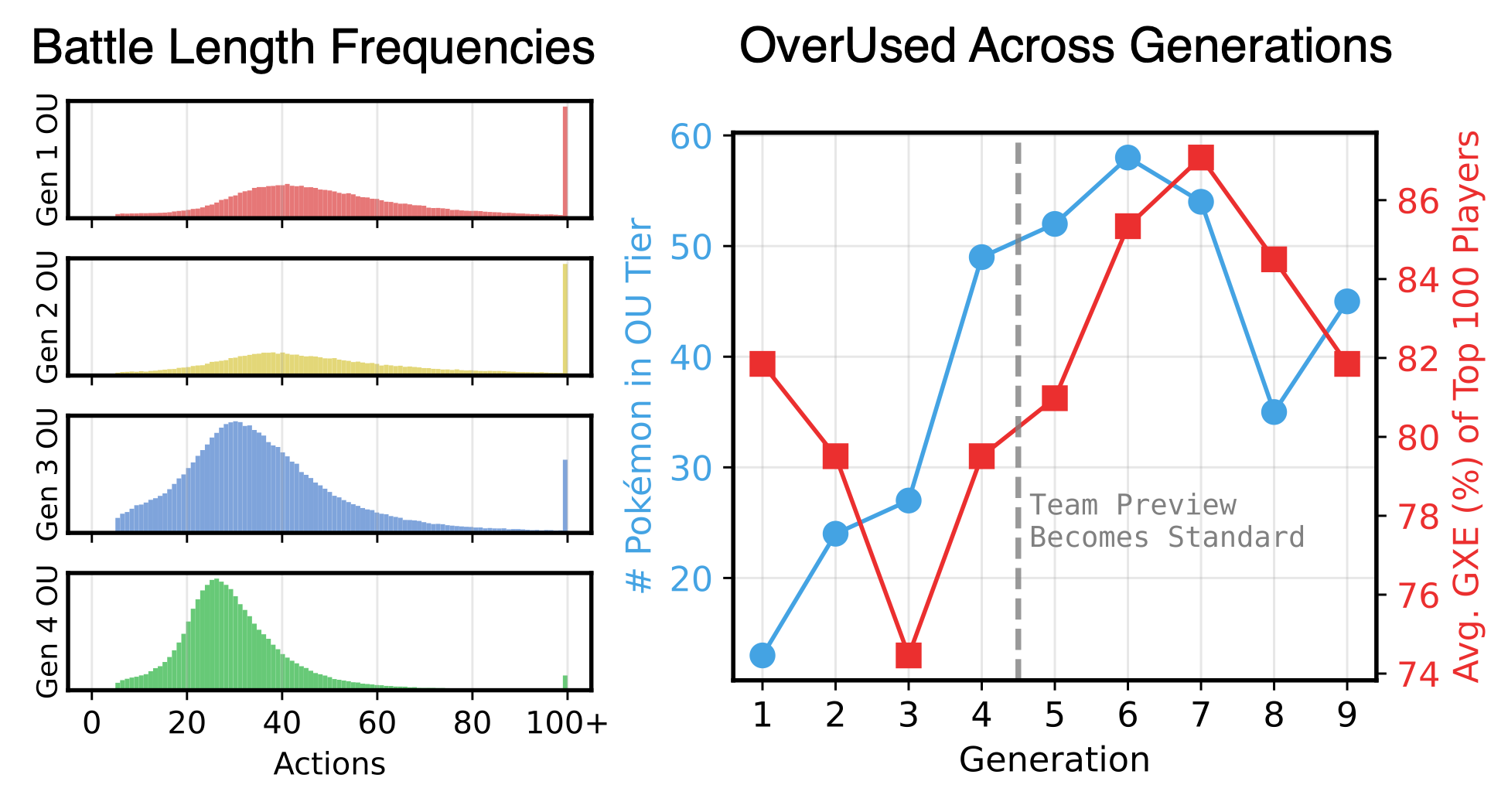

- Stochasticity: Turn outcomes are random. Many different results are possible after each move (damage rolls, critical hits, accuracy checks, secondary effects), and a single turn can make or break a battle. The better player does not always win. In fact, the very best players are (only) 75–90% favorites against a randomly sampled player, depending on the ruleset.

- Imperfect Information and Opponent Prediction: Battles revolve around which team information has and has not been revealed to the opponent. Inferring unrevealed Pokémon, items, and moves can be a major advantage but requires a detailed understanding of current team design trends. Pokémon is a simultaneous-move game, and the value of each action is highly dependent on the opponent's decision. Listen to any good player discussing their thought process during a battle and the main thing you'll notice is how much time they spend on team inference and move prediction. Here's one example (no need to watch more than a minute to get the idea): example commentary.

- Datasets: Between its battle replays, team design stats, forums, and wikis, Pokémon is a goldmine of naturally occurring training data.
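The point about simultaneous moves can be made concrete with a small best-response calculation. The sketch below is a minimal illustration under stated assumptions: the two actions, the payoff numbers (read as our estimated win probability after the turn resolves), and the names `PAYOFF`, `expected_values`, and `best_response` are all hypothetical and do not come from any real battle engine or dataset. It weights each of our actions by a belief about what the opponent will do and picks the action with the highest expected payoff.

```python
from __future__ import annotations

# Hypothetical one-turn payoff table for a simultaneous-move decision.
# Key: (our action, opponent action). Value: our estimated win probability
# after the turn resolves. These numbers are illustrative, not game data.
OUR_ACTIONS = ["attack", "switch"]
PAYOFF = {
    ("attack", "attack"): 0.6,
    ("attack", "switch"): 0.2,  # opponent switches into a Pokémon that resists our attack
    ("switch", "attack"): 0.4,
    ("switch", "switch"): 0.7,
}


def expected_values(belief: dict[str, float]) -> dict[str, float]:
    """Expected payoff of each of our actions, given a probability
    distribution (belief) over the opponent's action."""
    return {
        ours: sum(p * PAYOFF[(ours, theirs)] for theirs, p in belief.items())
        for ours in OUR_ACTIONS
    }


def best_response(belief: dict[str, float]) -> str:
    """Our action with the highest expected payoff under the belief."""
    ev = expected_values(belief)
    return max(ev, key=ev.get)


if __name__ == "__main__":
    # If we expect the opponent to attack, attacking back looks best...
    print(best_response({"attack": 0.9, "switch": 0.1}))
    # ...but if we expect a switch, the best choice flips.
    print(best_response({"attack": 0.3, "switch": 0.7}))
```

With these made-up numbers, attacking is best when we are confident the opponent will attack, while switching is best when we expect them to switch. Because the best action flips with the prediction, a player whose choices are easy to predict can be exploited, which is one reason strong players spend so much effort on prediction.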